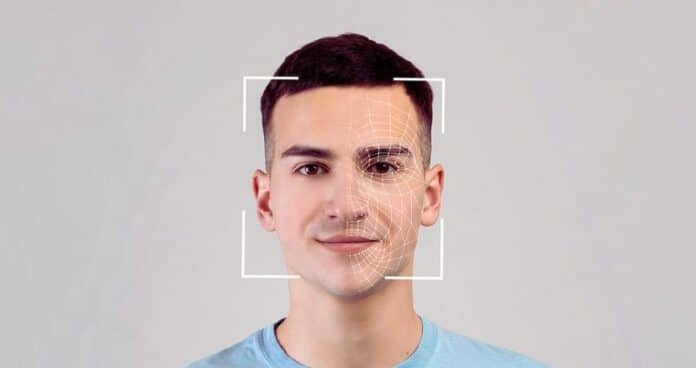

The Deepfake Detection Challenge (DFDC) is a competition organized by AWS, Meta/Facebook, Microsoft, and the Partnership on AI to develop new methods for identifying deepfakes and manipulated media.

Deepfakes are videos or audio recordings that have been manipulated to make it appear as if someone is saying or doing something they never said or did.

The DFDC dataset consists of over 100,000 videos, including both real and manipulated clips.

Deepfake Detection Challenge (DFDC) Counters Dangerous Technology

The term “deepfake” combines “deep learning” and “fake”. Deep learning is a branch of artificial intelligence (AI) that enables computers to learn patterns from data, and it is what allows deepfake tools to generate realistic outputs.

Deepfake technology is being used to create fabricated but convincing media for a variety of purposes. Some uses are benign, but the real challenge lies in the harmful ones.

Some applications are harmless, such as entertainment or digital avatars that make campaigns easier to produce. However, the technology becomes highly problematic when it contributes to misinformation and online harassment.

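Deepfakes and other doctored videos have also become a popular weapon for political opponents. For example, in 2019, ahead of the 2020 US elections, a manipulated video of House Speaker Nancy Pelosi went viral in which she appeared drunk while giving a speech. That clip was not generated with AI; it was simply slowed down, which shows how little manipulation it takes to mislead viewers. Then-President Donald Trump also shared an edited video of Pelosi, fueling the controversy.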

Deepfakes pose serious threats to the credibility, privacy and security of individuals and society, especially on social media platforms where they spread like wildfire.

Their impact on social media users can be devastating. Deepfakes can erode trust in information sources and damage reputations and relationships. They can also be used to incite violence and hatred, influence elections and public opinion, extort and blackmail victims, and violate human rights and dignity.

Benefits of DFDC Dataset

To combat this growing threat, researchers are actively developing methods to detect and mitigate the spread of deepfakes. The DFDC dataset offers numerous benefits, including its size, variety, labeling, and open-source availability, which contribute to enhancing the accuracy and effectiveness of deepfake detection models.

Size and Diversity

The DFDC dataset stands out as one of the largest and most diverse collections of manipulated media currently available. This extensive dataset gives researchers ample opportunity to train and evaluate their deepfake detection models on a wide range of deepfakes. By exposing models to various manipulation techniques and scenarios, researchers can refine their algorithms to detect deepfakes more accurately. The sheer scale of the dataset helps prepare models for real-world conditions, although detectors can still perform noticeably worse on deepfakes that differ from anything in their training data.

Variety of Deepfake Techniques

In addition to its size, the DFDC dataset encompasses a diverse array of deepfake techniques, including face swapping, voice swapping, and lip-syncing, among others. By incorporating multiple manipulation techniques, the dataset helps researchers build robust detection models that can recognize a wide spectrum of deceptive content. This variety improves the chance that models stay effective as deepfake techniques evolve and become more sophisticated, although detectors still need updating as new methods appear.

Labeling and Supervised Learning

The DFDC dataset is meticulously labeled, with each video classified as either real or manipulated. This labeling enables researchers to adopt a supervised learning approach, the most common way of training deepfake detectors. By training models on labeled data, researchers can guide them to identify key visual and auditory indicators of manipulation, and the same labels make it possible to measure how accurate and reliable the resulting algorithms are.
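As an illustration of how those labels become training targets, here is a minimal sketch that turns DFDC-style metadata into binary labels. It assumes the `metadata.json` layout of the Kaggle release, where each video filename maps to a `label` (`REAL` or `FAKE`), a `split`, and, for fakes, the `original` video it was made from. The filenames and the `load_labels` helper are hypothetical placeholders, not official DFDC tooling.

```python
import json

# Assumed DFDC metadata.json layout (Kaggle release); filenames are hypothetical.
# Each entry has a "label" ("REAL" or "FAKE"), a "split", and, for fakes,
# the "original" real video the fake was generated from.
EXAMPLE_METADATA = """
{
  "aaaaaaaaaa.mp4": {"label": "FAKE", "split": "train", "original": "bbbbbbbbbb.mp4"},
  "bbbbbbbbbb.mp4": {"label": "REAL", "split": "train", "original": null},
  "cccccccccc.mp4": {"label": "FAKE", "split": "train", "original": "bbbbbbbbbb.mp4"}
}
"""


def load_labels(metadata_json: str) -> dict[str, int]:
    """Map each video filename to a binary target: 1 = manipulated, 0 = real."""
    metadata = json.loads(metadata_json)
    labels = {}
    for filename, info in metadata.items():
        label = info["label"].upper()
        if label not in ("REAL", "FAKE"):
            raise ValueError(f"unexpected label {label!r} for {filename}")
        labels[filename] = 1 if label == "FAKE" else 0
    return labels


if __name__ == "__main__":
    labels = load_labels(EXAMPLE_METADATA)
    print(f"{sum(labels.values())} fake / {len(labels)} total")
```

The resulting dictionary pairs each video with the 0/1 target that a supervised classifier is trained to predict.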

Open-Source Availability

An additional advantage of the DFDC dataset is that it is publicly released and free for researchers to use under its research license. This removes barriers to entry, allowing researchers from diverse backgrounds to use the dataset and contribute to the advancement of deepfake detection. This openness fosters collaboration, encourages the sharing of research findings, and accelerates progress in combating the spread of deepfakes.

Training and Testing

The DFDC dataset serves as an invaluable resource for training and testing deepfake detection models. The dataset’s size and diversity enable researchers to develop robust models that can accurately identify manipulated media across various contexts.
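One practical detail when training and testing on the DFDC dataset: many fakes are generated from the same real source video, so a purely random split can put near-identical footage on both sides and inflate test scores. The sketch below groups each fake with its source (again assuming the `original` field of `metadata.json`) and assigns whole groups to `train` or `val` by hashing. `assign_split` and `val_percent` are illustrative names, and grouping by source video is only a minimal safeguard: the same actor can still appear in several different source videos.

```python
import hashlib

# Assumption: metadata follows the DFDC metadata.json layout, where each fake
# records the real video it was generated from under "original".


def group_key(filename: str, info: dict) -> str:
    """Fakes are grouped with their source video; real videos are their own group."""
    if info.get("label") == "FAKE" and info.get("original"):
        return info["original"]
    return filename


def assign_split(metadata: dict, val_percent: int = 20) -> dict[str, str]:
    """Deterministically assign every video to 'train' or 'val' by hashing its group.

    A real video and all fakes derived from it always land on the same side,
    so the model is never tested on footage it effectively saw during training.
    """
    splits = {}
    for filename, info in metadata.items():
        key = group_key(filename, info)
        # Stable bucket in [0, 100) derived from the group key.
        bucket = int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16) % 100
        splits[filename] = "val" if bucket < val_percent else "train"
    return splits


if __name__ == "__main__":
    metadata = {  # hypothetical entries
        "real_a.mp4": {"label": "REAL", "original": None},
        "fake_a1.mp4": {"label": "FAKE", "original": "real_a.mp4"},
        "real_b.mp4": {"label": "REAL", "original": None},
    }
    print(assign_split(metadata))
```

Hashing instead of shuffling keeps the split reproducible across runs without storing a separate list of assignments.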

Identifying New Detection Methods

Researchers can leverage the DFDC dataset to identify and explore novel deepfake detection methods. By testing different approaches on the dataset, researchers can assess their effectiveness and refine their techniques, thereby contributing to the ongoing evolution of deepfake detection.
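Comparing approaches fairly requires a shared metric. The DFDC competition on Kaggle ranked submissions by log loss (binary cross-entropy), which rewards well-calibrated probabilities and heavily punishes confident mistakes. A minimal stdlib sketch follows; the two detectors' scores are made-up numbers for illustration.

```python
import math


def log_loss(y_true: list[int], y_prob: list[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy; y_prob[i] is the predicted probability that video i is FAKE."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("y_true and y_prob must be non-empty and the same length")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never receives 0
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


if __name__ == "__main__":
    labels = [1, 0, 1, 0]
    detector_a = [0.9, 0.2, 0.7, 0.4]  # hypothetical scores
    detector_b = [0.6, 0.5, 0.5, 0.5]  # hypothetical scores
    for name, scores in (("A", detector_a), ("B", detector_b)):
        print(name, round(log_loss(labels, scores), 4))
```

Lower is better; a detector that always outputs 0.5 scores ln 2 (about 0.693), a useful baseline when judging whether a new method is learning anything at all.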

Improving Existing Detection Methods

The DFDC dataset also allows researchers to enhance the accuracy and effectiveness of existing deepfake detection methods. By training these methods on the dataset, researchers can fine-tune their models, leading to improved detection rates and reduced false positives.
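Reducing false positives (real videos wrongly flagged as fake) often comes down to choosing a decision threshold, not only retraining. The sketch below, with hypothetical scores and helper names, computes the false and true positive rates at a given threshold and picks the lowest threshold that keeps the false positive rate within a budget. Because the false positive rate can only fall as the threshold rises, the lowest qualifying threshold is the one that still catches the most fakes.

```python
def rates_at_threshold(y_true, y_prob, threshold):
    """Return (false positive rate, true positive rate) when scores >= threshold mean FAKE."""
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, y_prob):
        predicted_fake = p >= threshold
        if predicted_fake and y == 1:
            tp += 1
        elif predicted_fake:
            fp += 1  # a real video wrongly flagged as a deepfake
        elif y == 1:
            fn += 1  # a deepfake that slipped through
        else:
            tn += 1
    fpr = fp / (fp + tn) if fp + tn else 0.0
    tpr = tp / (tp + fn) if tp + fn else 0.0
    return fpr, tpr


def lowest_threshold_for_fpr(y_true, y_prob, max_fpr):
    """Lowest candidate threshold whose false positive rate is <= max_fpr, or None."""
    for threshold in sorted(set(y_prob)):
        fpr, _ = rates_at_threshold(y_true, y_prob, threshold)
        if fpr <= max_fpr:
            return threshold
    return None


if __name__ == "__main__":
    labels = [0, 0, 1, 1]
    scores = [0.1, 0.6, 0.4, 0.9]  # hypothetical detector outputs
    print(lowest_threshold_for_fpr(labels, scores, max_fpr=0.0))  # prints 0.9
```

In practice the threshold would be tuned on a validation split and then checked once on held-out test data.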

Methods of Deepfake Detection

Deepfake detection is a challenging problem, but the DFDC promises to help the field make significant progress. Here are some of the methods researchers have used as they continue to improve detection accuracy.

Convolutional Neural Networks (CNNs)

CNNs are a type of deep learning algorithm that have been used for a variety of tasks, including image classification and object detection. CNNs can be used to identify features in images that are indicative of deepfakes, such as unnatural skin texture or lighting.
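To make the CNN idea concrete, here is a minimal forward pass in plain Python: one convolutional layer, a ReLU, global average pooling, and a logistic output. The kernel is a hand-picked Laplacian (high-pass) filter standing in for learned weights, an assumption for illustration only; a real detector learns many such filters from labeled frames and stacks many layers.

```python
import math

# Hypothetical hand-set 3x3 kernel: a Laplacian (high-pass) filter that responds to
# abrupt local intensity changes and is zero on flat regions. In a real CNN these
# weights are learned from labeled data, not chosen by hand.
LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def conv2d(image, kernel):
    """Valid 2D cross-correlation, the operation a CNN convolutional layer performs."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + m][j + n] * kernel[m][n] for m in range(kh) for n in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def tiny_cnn_score(image, weight=1.0, bias=0.0):
    """conv -> ReLU -> global average pool -> logistic output (probability of FAKE)."""
    activations = relu(conv2d(image, LAPLACIAN))
    cells = len(activations) * len(activations[0])
    pooled = sum(sum(row) for row in activations) / cells
    return 1.0 / (1.0 + math.exp(-(weight * pooled + bias)))


if __name__ == "__main__":
    flat = [[7] * 5 for _ in range(5)]
    seam = [[0, 0, 9, 9, 9] for _ in range(5)]  # hypothetical sharp edge
    print(tiny_cnn_score(flat), tiny_cnn_score(seam))
```

A flat patch produces no high-frequency response and scores exactly 0.5 with the default zero bias, while a patch with an abrupt intensity change pushes the score upward; training would adjust the kernel, weight, and bias so that such responses line up with the real/fake labels.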

Optical Flow

Optical flow is a technique for estimating the apparent motion of pixels between consecutive video frames. It can help identify manipulated frames, because the motion in those frames may be inconsistent with the motion in the surrounding frames.
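A minimal sketch of the idea, assuming grayscale frames given as lists of lists: a Lucas-Kanade estimate of a single global motion vector between two frames (the whole frame is treated as one window), followed by a simple heuristic that flags frame pairs whose motion differs sharply from their neighbors'. Both `global_flow` and `flag_inconsistent` are illustrative helpers and the threshold is a hypothetical tuning parameter; practical detectors typically compute dense flow over face regions, for example with OpenCV.

```python
import math


def global_flow(prev, curr):
    """Estimate one (u, v) displacement between two grayscale frames with Lucas-Kanade.

    Least-squares solution of the brightness-constancy equation Ix*u + Iy*v + It = 0
    summed over all interior pixels. Spatial gradients are averaged across both frames.
    """
    h, w = len(prev), len(prev[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix = ((prev[y][x + 1] - prev[y][x - 1]) + (curr[y][x + 1] - curr[y][x - 1])) / 4.0
            iy = ((prev[y + 1][x] - prev[y - 1][x]) + (curr[y + 1][x] - curr[y - 1][x])) / 4.0
            it = curr[y][x] - prev[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("texture too uniform to estimate flow (aperture problem)")
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = [-sxt, -syt] by Cramer's rule.
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def flag_inconsistent(flows, threshold):
    """Indices of frame pairs whose flow differs from their neighbors' mean by > threshold."""
    flagged = []
    for i, (u, v) in enumerate(flows):
        neighbors = [flows[j] for j in (i - 1, i + 1) if 0 <= j < len(flows)]
        if not neighbors:
            continue
        mu = sum(n[0] for n in neighbors) / len(neighbors)
        mv = sum(n[1] for n in neighbors) / len(neighbors)
        if math.hypot(u - mu, v - mv) > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    prev = [[x * x + y * y for x in range(8)] for y in range(8)]
    curr = [[(x - 1) ** 2 + y * y for x in range(8)] for y in range(8)]  # shifted right by 1
    print(global_flow(prev, curr))
    print(flag_inconsistent([(1, 0), (1, 0), (5, 0), (1, 0), (1, 0)], threshold=2.5))
```

For the synthetic pattern above, shifted one pixel to the right, the estimate is u = 1 and v = 0, and the sudden jump in the third flow vector is the one that gets flagged.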

Audio Analysis

Audio analysis can be used to identify deepfakes created with voice swapping, by detecting features in the voice that are indicative of manipulation, such as unnatural pitch or timbre.
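Pitch is one of the features mentioned above, and a classic way to estimate it is autocorrelation: a voiced sound repeats with its fundamental period, so the signal correlates most strongly with itself at that lag. A minimal stdlib sketch follows; the 50 to 500 Hz search range is an assumed rough range for human voices, the test tone is synthetic, and how a detector would turn the resulting pitch contour into a real-or-fake decision is not shown.

```python
import math


def estimate_pitch(samples, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate the fundamental frequency (Hz) by picking the autocorrelation peak.

    Only lags corresponding to fmin..fmax are searched (assumed voice range).
    """
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    best_lag, best_corr = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[n] * samples[n + lag] for n in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    if best_lag is None:
        raise ValueError("signal too short for the requested pitch range")
    return sample_rate / best_lag


def pitch_contour(samples, sample_rate, frame_size, hop):
    """Pitch estimate per frame; a detector could examine how this contour varies."""
    return [
        estimate_pitch(samples[start:start + frame_size], sample_rate)
        for start in range(0, len(samples) - frame_size + 1, hop)
    ]


if __name__ == "__main__":
    sr = 8000
    tone = [math.sin(2 * math.pi * 200 * n / sr) for n in range(1600)]  # synthetic 200 Hz tone
    print(estimate_pitch(tone[:800], sr))
    print(pitch_contour(tone, sr, frame_size=400, hop=400))
```

Real speech pitch drifts naturally over time, so features such as the contour's range or smoothness are the kind of signal a learned audio detector might pick up on, alongside spectral cues for timbre.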

A Collaborative Environment

In addition to the DFDC, there are a number of other initiatives that are working to address the same problem. These initiatives include:

The Partnership on AI’s Media Integrity Initiative

The Partnership on AI’s Media Integrity Initiative is a coalition of technology companies, academic institutions, and non-profits that is working to develop tools and best practices for detecting and mitigating the impact of deepfakes.

The IEEE Technical Committee on Countering Deepfakes

The IEEE Technical Committee on Countering Deepfakes is a group of experts from academia, industry, and government who are working to develop standards and best practices for countering deepfakes.

The European Union’s Deepfake Detection Project

The European Union’s Deepfake Detection Project is developing tools and methods for detecting deepfakes.

These initiatives are all working to address the rapidly growing problem of deepfakes. Only by working together can they make deepfakes less effective and easier to detect.