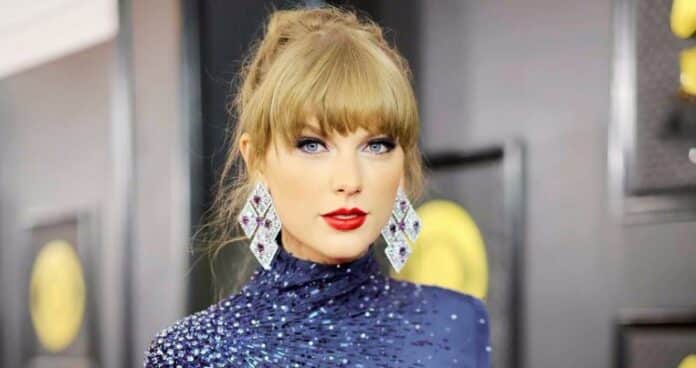

Social media platform X has taken a significant step by blocking searches for Taylor Swift following the circulation of explicit AI-generated images of the singer on the site. Joe Benarroch, X’s head of business operations, told the media that this move is a “temporary action” aimed at prioritizing safety.

Users attempting to search for the singer on the platform now encounter a message stating, “Oops, Something went wrong.” The explicit AI-generated images of the singer gained widespread attention earlier this week, going viral and amassing millions of views. This prompted concerns from both US officials and the singer’s fanbase.

Taylor Swift Fans Take Matter into Their Own Hands

The artist’s fans took swift action by flagging posts and accounts sharing the fake images. They flooded the platform with genuine images and videos of the singer, using the hashtag “protect Taylor Swift”. In response, X, formerly known as Twitter, issued a statement on Friday, emphasizing that posting non-consensual nudity on the platform is “strictly prohibited”.

The statement declared a zero-tolerance policy, with teams actively working to remove identified images and take appropriate actions against the responsible accounts.

X attributed the blockade to its ongoing efforts to combat the spread of harmful content. Prior to the search blockade, the platform identified a surge in the circulation of manipulated, sexually suggestive images of Taylor Swift. These “deepfakes” are a concerning form of online abuse and harassment, particularly when directed at high-profile individuals.

There’s no doubt that Taylor Swift’s fans played a crucial role in bringing the deepfakes to light. Their vocal condemnation and reporting of the harmful content alerted both X and the public to the problem.

How Else Could X Have Responded to the Issue?

While X hasn’t officially disclosed the specific algorithms or metrics used in identifying this content, the company stated that the temporary search blockade prioritized user safety and aimed to minimize exposure to these harmful images. This decision aligns with X’s stated policy of prohibiting non-consensual nudity and taking “appropriate actions” against accounts responsible for posting such content.

X’s approach has also received criticism. Some argue that the company’s blanket blockade on Swift-related searches is an overly broad, potentially censorship-like measure. They argue that the social media giant could have targeted only the accounts responsible without completely restricting access to information about the singer.

Growing Concern Calls for New Laws

The matter attracted the attention of the White House, which called the spread of the AI-generated photos “alarming.” During a briefing, White House press secretary Karine Jean-Pierre expressed concern over the disproportionate impact on women and girls, advocating for legislation to address the misuse of AI technology on social media. Jean-Pierre emphasized the role of platforms in enforcing rules to prevent the dissemination of misinformation and non-consensual intimate imagery.

In response to the growing concern, some US politicians are calling for new laws to criminalize the creation of deepfake images. Deepfakes, which use artificial intelligence to manipulate someone’s face or body in a video, have seen a 550% increase in creation since 2019, according to a 2023 study. While there are currently no federal laws against the sharing or creation of deepfake images in the US, there have been initiatives at the state level to address this issue. In the UK, the sharing of deepfake pornography was made illegal as part of the Online Safety Act in 2023.