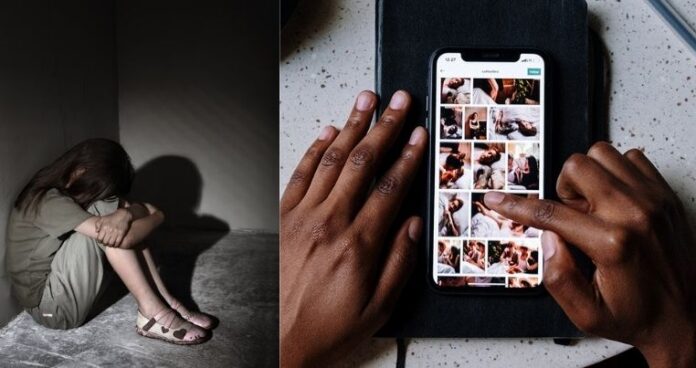

Security researchers have voiced concern that Apple scanning iPhones for Child Sexual Abuse Material (CSAM) could become a new route to mass surveillance. The tech giant released a technical summary of a system that will find and report child sexual abuse content on the phones of American customers. Experts worry that this technology could be expanded into a ready-made backdoor that lets government agencies spy on their citizens and access their private content. Any prohibited content or political views found on someone’s phone could prompt a government to target that customer.

Apple claimed that the tool uses cryptography to help reduce the spread of child pornography and images of child abuse. The well-known NSA whistleblower Edward Snowden also slammed Apple for turning the iPhone into an “iNarc” device.

Why Is Apple Scanning iPhones a Problem for Security Advocates?

While some American citizens accept that agencies might have good reason to monitor them, many users flooded social media with the opposite view. They claim that Apple is merely marketing this new technology as something that will reduce child abuse, while it also creates an opportunity to violate the privacy of iPhone owners. While searching for CSAM, a reviewer could also come across customers’ private nude pictures, which would then be open to exploitation.

Users are also worried that Apple scanning iPhones will inspire other companies to follow suit under some other justification.

How Does This New Technology Work?

The tech giant explained that before a photo is stored in iCloud, a system on the device cross-references it against a list of known CSAM. This list is reportedly compiled by the US National Center for Missing and Exploited Children (NCMEC). Users’ devices will automatically store a blinded version of this database and determine whether a photo matches an entry in it. The system reportedly flags content only when it matches an image in that database. Users cannot learn which picture is being matched against which entry unless they know the server-side blinding secret, which only Apple holds. The system is also able to catch edited versions of the listed images.
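To see how a hash-based match can survive a simple edit, here is a minimal Python sketch. It is not Apple’s algorithm: it uses a toy “average hash” as a stand-in, leaves out the blinding step entirely, and every name and pixel value in it (`average_hash`, `matches_database`, the 4x4 images) is a hypothetical chosen for illustration.

```python
from typing import List, Set

Image = List[List[int]]  # grayscale pixels, 0-255


def average_hash(image: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_database(image: Image, known_hashes: Set[int], max_distance: int = 0) -> bool:
    """Report a match when the image hash is within max_distance bits of any listed hash."""
    h = average_hash(image)
    return any(hamming_distance(h, k) <= max_distance for k in known_hashes)


# Hypothetical 4x4 image standing in for an entry on the list.
listed = [
    [200, 190, 30, 20],
    [210, 180, 25, 35],
    [40, 30, 220, 200],
    [20, 45, 210, 190],
]
# An "edited" copy: every pixel brightened by 15.
edited = [[min(255, p + 15) for p in row] for row in listed]
# An unrelated checkerboard pattern.
unrelated = [
    [10, 240, 10, 240],
    [240, 10, 240, 10],
    [10, 240, 10, 240],
    [240, 10, 240, 10],
]

# The database holds only hashes, never the images themselves.
known_hashes = {average_hash(listed)}

print(matches_database(edited, known_hashes))     # the brightened copy still matches
print(matches_database(unrelated, known_hashes))  # the checkerboard does not
```

The point of the sketch is only that the comparison happens on hashes rather than on pictures, and that a hash built from coarse image features can stay the same after a small edit.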

Apple gave assurances that this system will not look at the user’s other private content and will stay limited to images matching those in the list. It also claimed that the software has a very low chance of incorrectly flagging any content or account. Apple maintains that it will manually review flagged images and, if a match is confirmed, report it to law enforcement authorities and disable the user’s account.

Inaccuracy in Machine Learning

Apple’s defense of scanning iPhones did not go down well with tech experts. They are well aware of the weaknesses of machine learning systems, which is why they have been raising their voices. The entries in the secret list are hashes of child abuse photos, which the device cannot read. Apple transforms these hashes with the server-side blinding secret, and hashing can produce collisions with other pictures. As discussed above, only Apple knows this secret, so outsiders cannot verify the system, which makes it unreliable in the eyes of many tech experts. In principle, any picture on a user’s phone could collide with an entry in the CSAM database.
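A collision is easy to demonstrate with a toy hash. The Python sketch below again uses a simple “average hash” as a stand-in, not Apple’s actual hashing or blinding, and the two 3x3 images are hypothetical values picked so that they look nothing alike yet hash identically.

```python
from typing import List


def average_hash(pixels: List[int]) -> int:
    """Toy perceptual hash over a flattened grayscale image (illustrative only)."""
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Two hypothetical 3x3 grayscale images, flattened row by row.
image_a = [250, 10, 250, 10, 250, 10, 250, 10, 250]      # stark black-and-white pattern
image_b = [130, 120, 140, 90, 135, 100, 128, 110, 131]   # soft, low-contrast gray patch

hash_a = average_hash(image_a)
hash_b = average_hash(image_b)

print(format(hash_a, "09b"))
print(format(hash_b, "09b"))
print(image_a != image_b and hash_a == hash_b)  # different pictures, same hash
```

A production system would use a far longer and more robust hash than this nine-bit toy, so the sketch says nothing about how often collisions happen in practice. It only shows why a hash match alone does not prove that two pictures are the same.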

There have been many instances in the past where technology failed to identify the actual criminal. One of them involved Apple: an attempt to catch a thief through facial recognition reportedly ended up implicating the wrong person, who then sued Apple for 1 billion USD over the wrongful arrest.